Jiacheng Chen

Welcome!

I am a PhD student in the CSE department at The Chinese University of Hong Kong, where I am fortunate to be advised by Prof. Yu Cheng and Prof. Weiyang Liu. I also work closely with Dr. Ganqu Cui and Prof. Ning Ding. I received my bachelor's degree from South China University of Technology, where I was fortunate to be advised by Prof. Yue-Jiao Gong. I was a visiting researcher at Caltech, where I was honored to be advised by Prof. Yisong Yue and Dr. Kaiyu Yang. I am interning or have interned at MiniMax and Shanghai AI Lab.

My research interests include:

- Reasoning in NLP

- Reinforcement Learning

🔥 News

- 2025.10: 🎉🎉 We introduce the P1 Series! P1 is a series of models designed to tackle Olympiad-level physics reasoning. Check the blog for more details!

- 2025.05: 🎉🎉 Our paper The Entropy Mechanism of Reinforcement Learning for Reasoning Language Models has been released!

- 2024.05: 🎉🎉 SYMBOL will be presented at ICLR 2024 as a poster!

- 2023.12: 🎉🎉 MetaBox will be presented at NeurIPS 2023 as an oral presentation!

📝 Publications

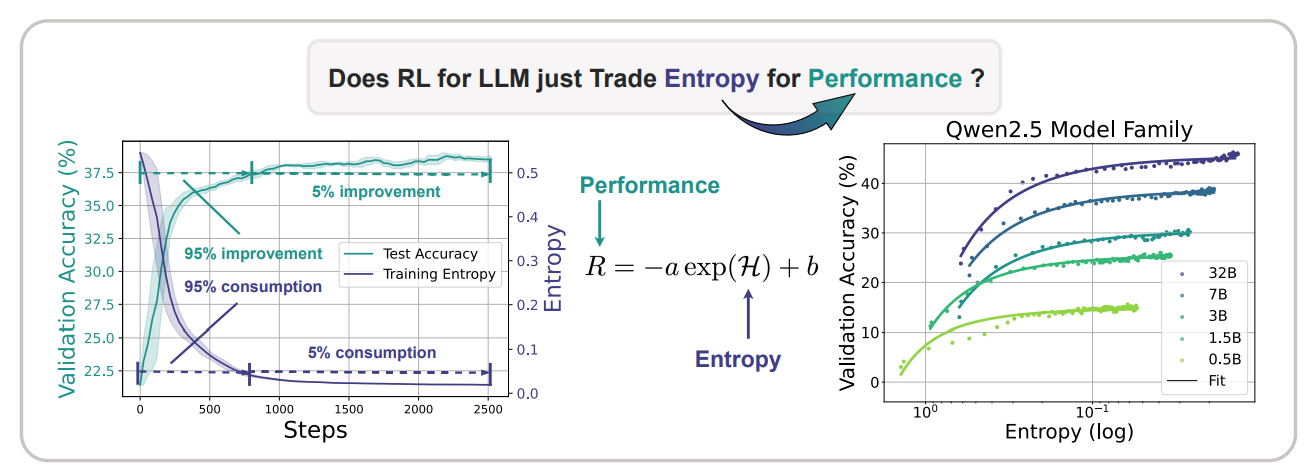

The Entropy Mechanism of Reinforcement Learning for Reasoning Language Models

Ganqu Cui*, Yuchen Zhang*, Jiacheng Chen*, Lifan Yuan, Zhi Wang, Yuxin Zuo, Haozhan Li, Yuchen Fan, Huayu Chen, Weize Chen, Zhiyuan Liu, Hao Peng, Lei Bai, Wanli Ouyang, Yu Cheng, Bowen Zhou, Ning Ding.

- We conducted empirical and theoretical analyses of the “entropy collapse” phenomenon and proposed a new approach to entropy control.

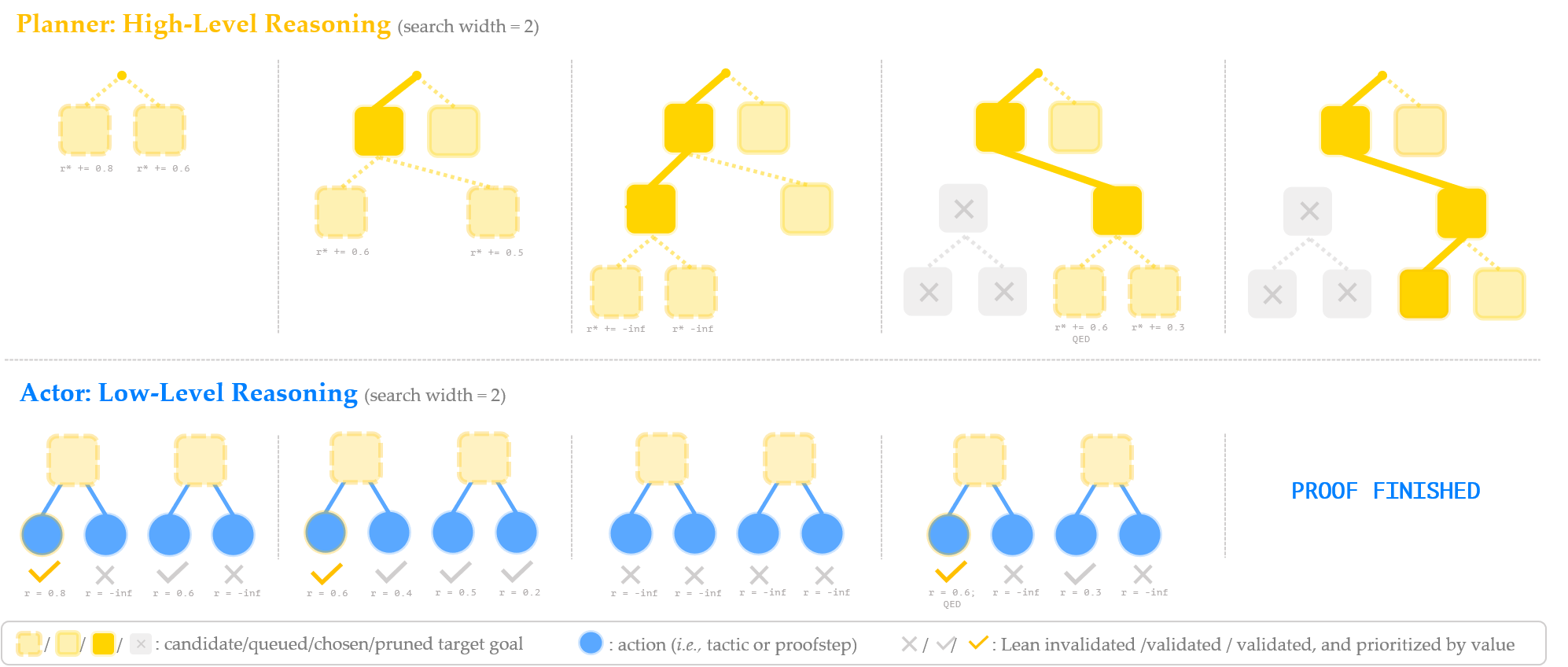

Reasoning in Reasoning: A Hierarchical Framework for Neural Theorem Proving (NeurIPS 2024 Workshop MATH-AI)

Ziyu Ye, Jiacheng Chen, Jonathan Light, Yifei Wang, Jiankai Sun, Mac Schwager, Philip Torr, Guohao Li, Yuxin Chen, Kaiyu Yang, Yisong Yue, Ziniu Hu.

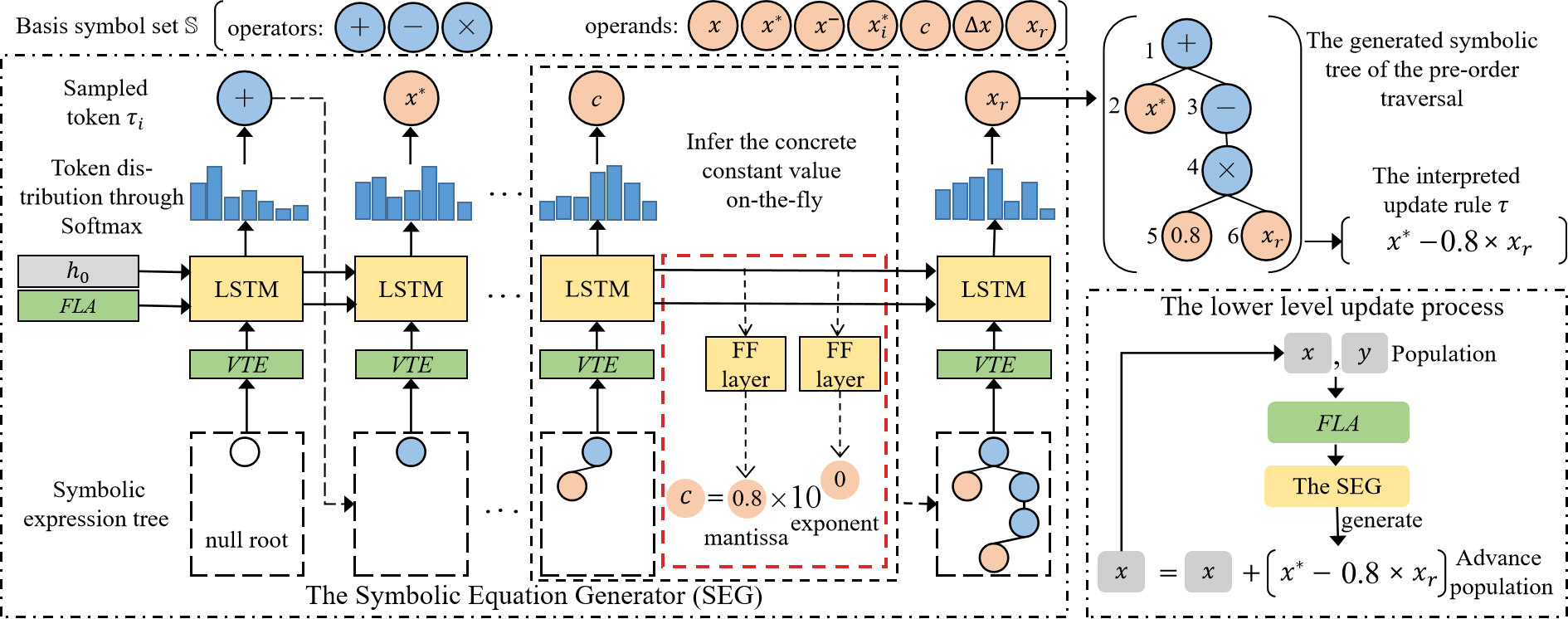

SYMBOL: Generating Flexible Black-Box Optimizers through Symbolic Equation Learning (ICLR 2024)

Jiacheng Chen*, Zeyuan Ma*, Hongshu Guo, Yining Ma, Jie Zhang, Yue-Jiao Gong.

- Unlike previous methods that incrementally auto-configure existing black-box algorithms, SYMBOL directly generates stepwise update rules in the form of symbolic equations to achieve more flexible and interpretable optimization behaviour.

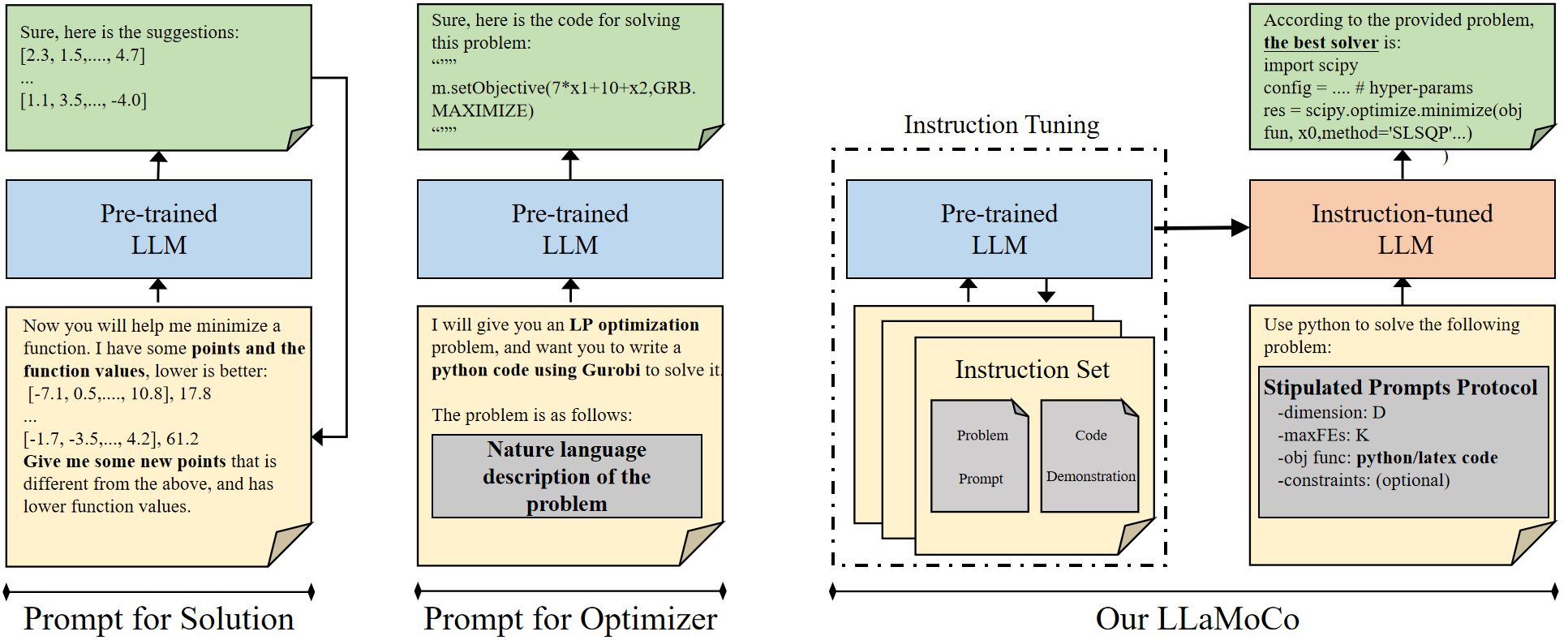

LLaMoCo: Instruction Tuning of Large Language Models for Optimization Code Generation

Zeyuan Ma, Hongshu Guo, Jiacheng Chen, Guojun Peng, Zhiguang Cao, Yining Ma, Yue-Jiao Gong.

- We propose fine-tuning language models to generate executable code for optimization tasks. In this paper, we built a dataset containing diverse optimization problems and their corresponding algorithms, applied several techniques during training, and provide a fine-tuned LM for optimization tasks.

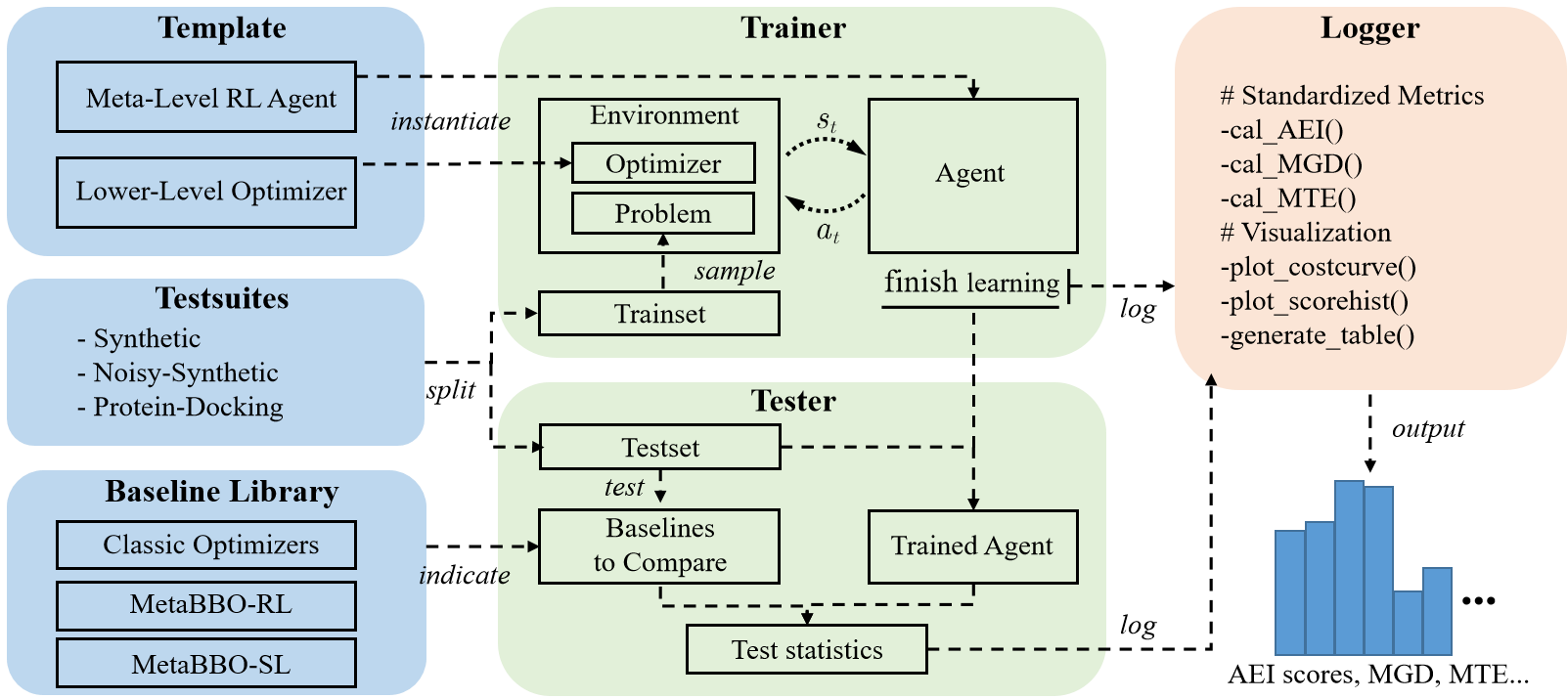

MetaBox: A Benchmark Platform for Meta-Black-Box Optimization with Reinforcement Learning (NeurIPS 2023 Oral)

Zeyuan Ma, Hongshu Guo, Jiacheng Chen, Zhenrui Li, Guojun Peng, Yue-Jiao Gong, Yining Ma, Zhiguang Cao.

- We released MetaBox, a benchmark platform for Meta-Black-Box Optimization. We integrate three different test suites, about 20 baselines including traditional black-box methods and Meta-Black-Box methods, and new evaluation metrics tailored for Meta-Black-Box optimization. The codebase can be found here.

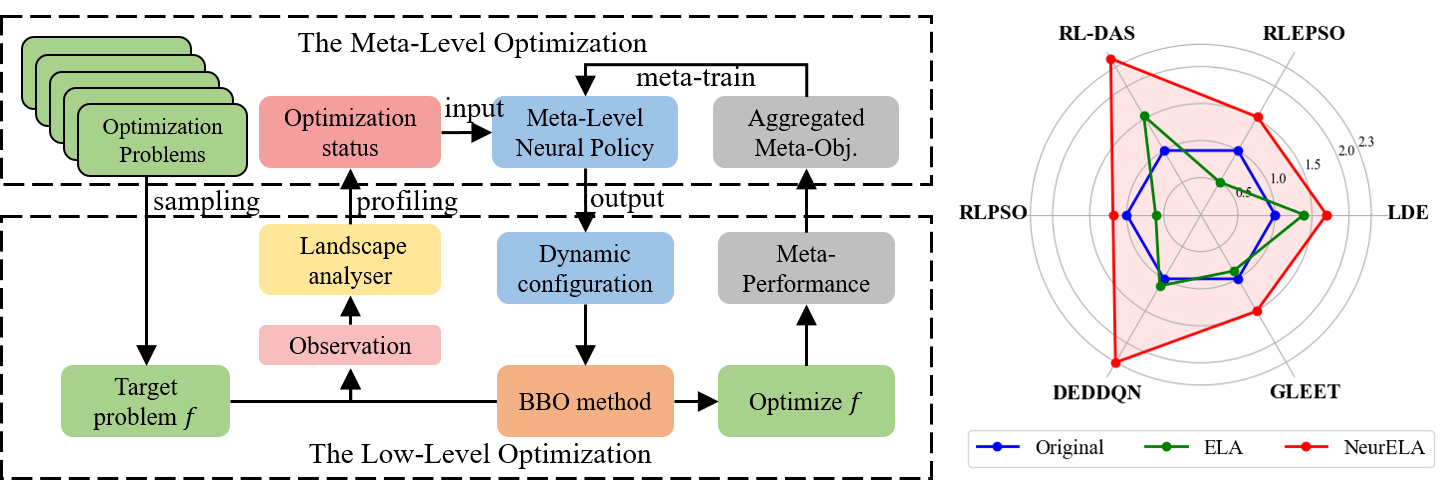

Neural Exploratory Landscape Analysis (ICLR 2025)

Zeyuan Ma, Jiacheng Chen, Hongshu Guo, Yue-Jiao Gong.

- We developed NeurELA, a neural-network-based landscape analyzer, to replace the feature-extraction components in Meta-Black-Box works, which are usually manually designed. To ensure that NeurELA generalizes well, we let it operate in a multi-task setting and train it with neuroevolution.

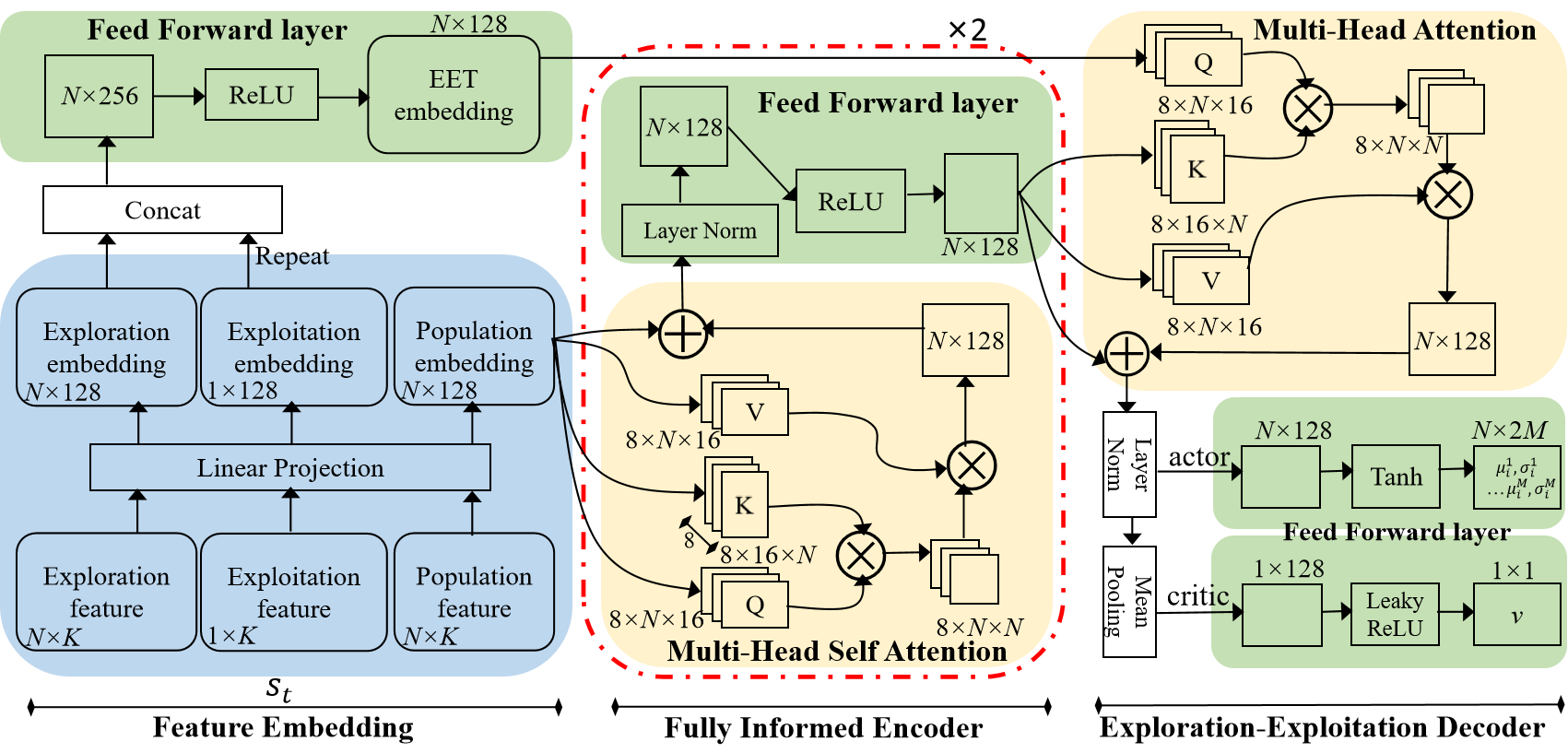

Auto-configuring Exploration-Exploitation Tradeoff in Evolutionary Computation via Deep Reinforcement Learning (GECCO 2024)

Zeyuan Ma*, Jiacheng Chen*, Hongshu Guo, Yining Ma, Yue-Jiao Gong.

- We explore how to trade off exploration and exploitation in black-box optimization with a learning-based method. In this work, we carefully designed a Transformer-based framework that leverages exploration-exploitation-related features tailored to black-box optimization scenarios to address this problem.

- ConfigX: Modular Configuration for Evolutionary Algorithms via Multitask Reinforcement Learning, Hongshu Guo*, Zeyuan Ma*, Jiacheng Chen, Yining Ma, Zhiguang Cao, Xinglin Zhang, Yue-Jiao Gong, AAAI 2025 (Oral)

- Deep Reinforcement Learning for Dynamic Algorithm Selection: A Proof-of-Principle Study on Differential Evolution, Hongshu Guo, Yining Ma, Zeyuan Ma, Jiacheng Chen, Xinglin Zhang, Zhiguang Cao, Jun Zhang, Yue-Jiao Gong, GECCO 2024

🎖 Honors and Awards

- 2024, Caltech Summer Undergraduate Research Fellowship (SURF).

📖 Education

- 2025.10 - present, PhD, Department of Computer Science and Engineering, The Chinese University of Hong Kong.

- 2021.09 - 2025.06, B.E., School of Computer Science and Technology, South China University of Technology.

💻 Research Experience

- 2024.06 - 2024.08, SURF, Caltech.

- Thesis: AI for Math.

- Advisors: Prof. Yisong Yue and Dr. Kaiyu Yang.

- 2022.03 - 2023.03, SRP, SCUT.

- Thesis: Meta Black Box Optimization.

- Advisor: Prof. Yue-Jiao Gong.